Kaggle DL Courses

sklearn

结构化数据预处理:数字数据 + 类别数据

注意:为避免Group Leak,这里按演唱者(artists)划分训练集、验证集

1 | import pandas as pd |

DL

layers, activations, losses, optimizers, callbacks, metrics

keras.Sequential → model.compile → model.fit → model.predict/model.evaluate

模型构建:网络层(layers)与激活函数(activations)

1 | from tensorflow import keras |

模型训练:损失函数(losses)与优化策略(optimizers)

1 | # tf.keras.losses: MeanAbsoluteError |

提前终止:欠拟合、过拟合

输入数据包含信号和噪声信息,无论模型学习到了信号还是噪声的模式都有助于降低训练的损失,但从噪声学到的模式无助于应对新的数据(不能泛化)。未获取足够信号模式表现为欠拟合,而学习了过多噪声信息则对应过拟合,通过对比训练集和验证集损失,有助于判断模型拟合情况。

提前终止EarlyStopping类位于Keras的回调模块(tf.keras.callbacks)。这里所谓“回调”是指在模型训练时调用的函数,通过模型训练fit函数的callbacks参数传入,并在模型训练时进行调用,用于控制/分析训练过程。Keras默认提供了一系列回调函数,也可通过LambdaCallback类自定义简单的回调函数。

1 | early_stopping = tf.keras.callbacks.EarlyStopping( |

特殊网络层

除了全连接Dense、卷积Conv2D等包含神经单元的通常网络层外,还有一些不含神经单元、实现特殊功能的网络层,如随机失活(Dropout)、批归一化(BatchNormalization)。随机失活在某种程度上可理解为众多小网络的集成学习,提升了整体结果鲁棒性;而批归一化则有助于缓解训练的不稳定性,提升训练速度。

Dropout层加在要失活的网络层之前,而BatchNormalization可用于激活后的特征,也可用于激活前(将激活函数拆出单独做一层),甚至作为第一层用于输入数据。注意,这些特殊网络层无需指定单元数(不含神经元)。

1 | layers.Dropout(rate=0.3) |

二分类问题

分类的准确率是离散分布,而损失函数需要是连续的 → 交叉熵(度量分布间差异)。虽然损失函数不能用准确度,但我们可能还是希望通过准确度更直观的判断模型表现,此时可通过度量指标metrics参数指定模型评价标准。

- 不同于Loss,Metric不用于模型优化,仅用于评估模型表现;

- Metric可以是任何自定义函数,由

compile的metrics参数传入; - 不同于Callback,Metric是作为

compile的参数传入,而非fit的参数; - Loss及所有Metric会在每个epoch结束计算,并保存于history.history的字典中,Metric会以函数名为关键字,当有验证集时会有相应的’val_xxx’项;

- EarlyStopping(callback)可指定检测标准,默认为验证集的损失(‘val_loss’),可设定为训练集损失(‘loss’),以及训练集或验证集上的其他任何度量函数取值。

1 | model.compile( |

- Create Your First Submission

- Cassava Leaf Disease

- Classify images with TPUs in Petals to the Metal

- Create art with GANs in I’m Something of a Painter Myself

- Classify Tweets in Real or Not? NLP with Disaster Tweets

- Detect contradiction and entailment in Contradictory, My Dear Watson

CV

图片预处理

1 | ds_train_ = image_dataset_from_directory( |

迁移学习

1 | pretrained_base = tf.keras.applications.VGG19( |

数据增强

数据增强通常在训练时执行(online),数据在输入网络前先执行一个随机变换(旋转、翻转、扭曲、调整颜色/对比度等),使模型每次看到都是“新”数据。但需要注意针对特定问题,不是所有变换都有用或合理,而寻找合适增强变换最好的途径就是尝试。

在Keras中数据增强可以融入数据输入的Pipline,如ImageDataGenerator函数,也可以借助预处理层融入网络结构,后一种方式好处是可以自动运行在GPU上。

1 | # Reproducability |

网络层

-

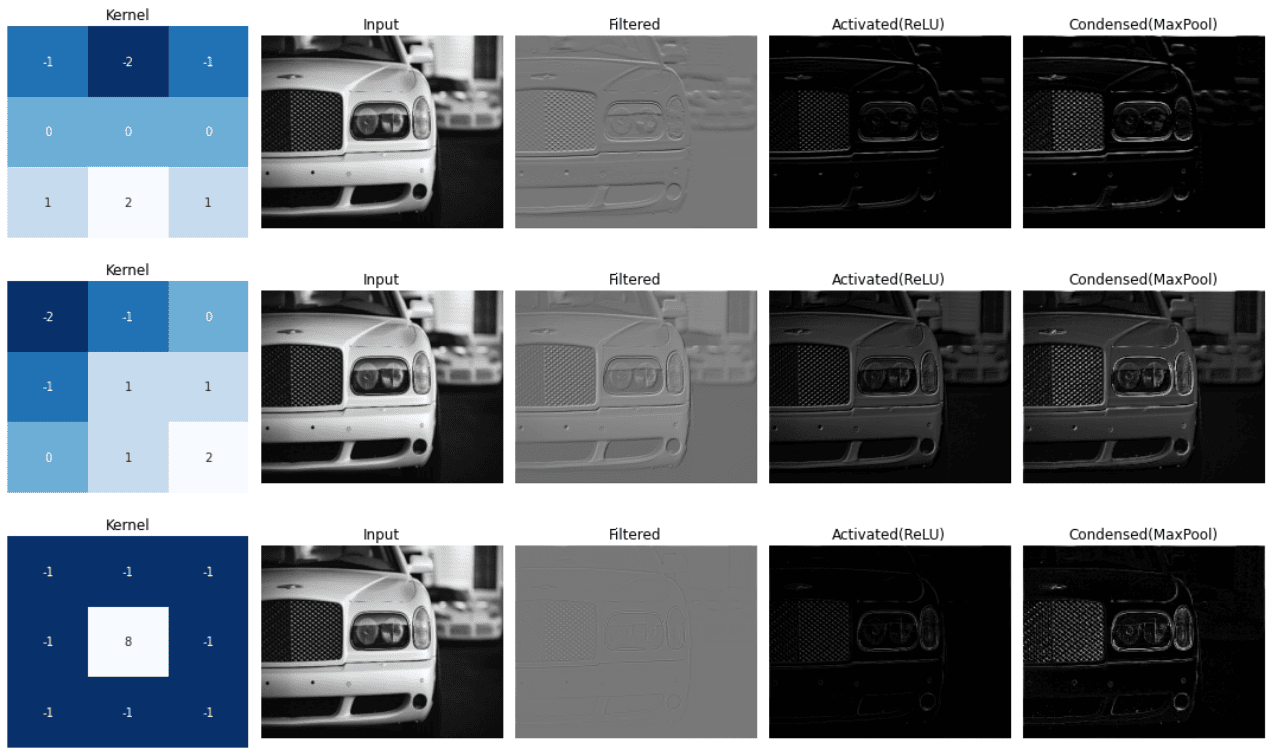

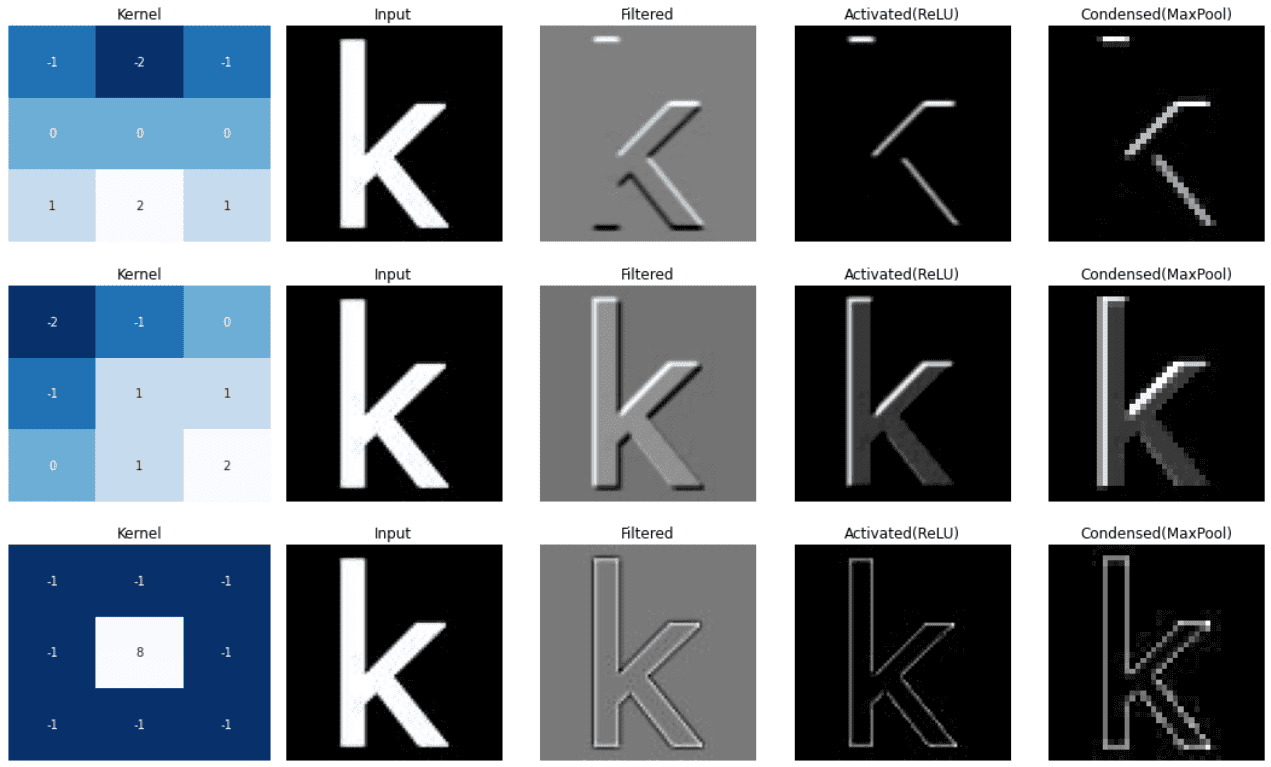

卷积层:针对特定特征(卷积核)进行图像滤波

layers.Conv2D

layers.Conv2D有两个基本参数filters,kernel_size(对应全连接的units),分别为卷积核的数目和大小,其中卷积核大小通常会取3、5等奇数(有明确中心)。

另外两个关键参数为步长(strides)和填充(padding),对应卷积核的单次移动距离以及输入图像的边缘处理。输出特征图尺寸 = (输出尺寸 + 两侧填充尺寸 - 卷积核尺寸)//步长 + 1。步长通常取1,填充可选择不填充(‘valid’),此时输出特征图尺寸会逐渐缩减,或者四周补0,保持输出特征图尺寸(‘same’)。 -

ReLU激活:从滤波后图像中检测特征,并输出特征图

Everything unimportant is equally unimportant. -

池化层:压缩特征图以凸显特征(最大池化)

layers.MaxPool2D,layers.GlobalAvgPool2D

经过ReLU激活后,负向特征都变为0,池化操作可以移除这些“无效”信息。另一方面,这些零值虽然与感兴趣的特征无关,但其实包含着位置信息,因此池化操作还引入了局域的平移不变性。(通常取strides大于1,小于窗口自身大小)

除了常见的最大池化,输出层附近还常使用全局平均池化(GlobalAvgPool)代替展开(Flatten)或全连接(Dense)。全局平均池化以平均值代表整个特征图((batch_size, rows, cols, channels)→(batch_size, channels)),可理解为通过一个值指示某个特征存在与否,对于分类而言通常足够了,可以显著减低参数数量,避免过拟合。

1 | import tensorflow as tf |

Three (3, 3) kernels have 27 parameters, while one (7, 7) kernel has 49, though they both create the same receptive field. This stacking-layers trick is one of the ways convnets are able to create large receptive fields without increasing the number of parameters too much.

NLP

https://www.kaggle.com/getting-started/161466

https://www.kaggle.com/product-feedback/299376

NLP Problems

NLP Problems

NLP for Beginners

Best of Kaggle Notebooks #4. - Natural Language Processing

Best of Kaggle Notebooks #6 - CNNs, LSTMs, GRU, AutoEncoder, Tabnet, UNet

Best of Kaggle Notebooks #7 - HyperParameter Optimization Tools